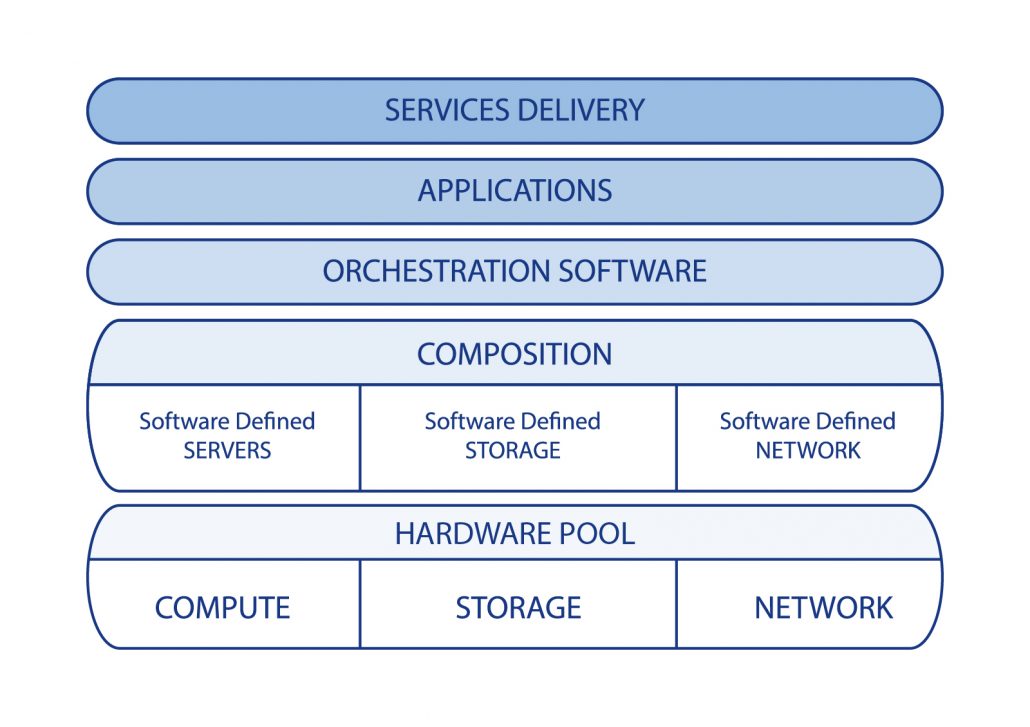

With Software Defined Infrastructure (SDI), virtualized workloads can be automated and controlled via software. They can be configured, moved, migrated, scaled and replicated independently of the underlying server, storage and network infrastructure.

By using open hardware and software, enterprises have the ability to compensate for the shortcomings of classic IT infrastructure and the public cloud by building private cloud environments perfectly adapted to their own needs. Many SDI implementations also bridge the gap between internal IT environments and external hosted, hybrid or public cloud services.

Orchestration software integrates the resource pools into centrally controlled and programmable management environments.

Network, storage and compute elements are abstracted into resource pools. This provides the flexibility to scale efficiently and opens up a much broader choice of hardware options.

These disaggregated compute, storage and network hardware resources can be upgraded at different rates depending on demand, which reduces CapEx.

Companies are free to purchase hardware without being bound to a single manufacturer. Disaggregated resource pools also remove the need to purchase hardware ahead of current demand, avoiding hardware depreciation and taking advantage of falling hardware prices.

Policy based automation provides dynamic provisioning and service assurance as applications are optimally deployed and maintained. Dynamically provisioning resources also allows a pay-as-you-grow model and the capability to flexibly increase capacity and performance as conditions require.

Hardware

Software Defined Infrastructure runs on disaggregated server, storage and networking blocks, merged into a single system based on commodity hardware. Additional nodes are easily added, so configurations can be flexibly scaled out.

Failure of individual components doesn’t impede functionality of the system, as workloads can be distributed between clusters by orchestration software.

A data center might be small to start with, but can grow much larger without changing the fundamental components and structures. The same disaggregated hardware blocks will be used, just more of them. With this disaggregated, scale-out approach, growth of the infrastructure can be accommodated without discarding one class of hardware for another.

The inherent plug-and-play capability makes it easy to expand the system with minimal disruption.

Consolidating the IT infrastructure into a single virtualized system reduces the number of devices, cuts maintenance costs and ensures there are fewer potential points of failure, making systems more resilient. This approach also enables management of the whole infrastructure as a single system using a common tool set.

Using commodity hardware also implies a lower risk of being tied to a particular vendor. At Datacom we recommend the use of Open Compute hardware, which allows mixing components from different manufacturers based on a common specification.

The Open Compute Project is an initiative launched in 2011 by Facebook to design and share innovative specifications for better data centers.

Hardware based on Open Compute specifications starts with a common rack, featuring a centralized power supply shared by all nodes in the rack. Power is distributed by a 12V bus bar on the back of the rack. The power supply is composed of several modules, providing redundancy and the possibility to install just the amount of power needed at the moment. Increasing demand can be satisfied by simply plugging in additional modules. A centralized power supply is much more efficient than separate smaller power supplies in each node. Also, it is not replaced and discarded when compute nodes are upgraded or storage is added to the rack.
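
The modular power supply described above lends itself to a simple sizing calculation. The following is a minimal Python sketch, not part of any Open Compute specification: the function name `modules_needed`, the 3 kW module rating and the rack loads are all hypothetical values chosen for illustration, and redundancy is assumed to mean one spare module beyond what the load requires.

```python
import math


def modules_needed(load_watts: float, module_watts: float, spares: int = 1) -> int:
    """Return how many power modules to install for a given rack load.

    `spares` extra modules are added so the rack keeps running if that
    many modules fail. All figures are illustrative assumptions.
    """
    if load_watts < 0 or module_watts <= 0 or spares < 0:
        raise ValueError("load and spares must be >= 0, module rating must be > 0")
    # Modules required to carry the load, rounded up, plus the spare modules.
    return math.ceil(load_watts / module_watts) + spares


if __name__ == "__main__":
    # Hypothetical rack that grows over time, using assumed 3 kW modules.
    for load in (4000, 7000, 10000):
        print(f"{load} W load -> {modules_needed(load, 3000)} modules")
```

As the rack load grows, only the difference in module count has to be purchased and plugged in, which is the pay-as-you-grow behaviour the paragraph above describes.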

Compute nodes are available in many options with sleds of 2x Xeon E5 in NUMA configuration or sleds of up to 4 independent Xeon D.

Storage blocks can be JBODs and JBOFs (supporting NVMe SSD disks) connected by PCIe interfaces to compute nodes, providing a highly flexible ratio of compute power to storage. Another option is to have one compute node integrated within the JBODs or JBOFs.

Split management and data planes represented by dedicated switches provide reliability, security and performance for the system.

A Software Defined Infrastructure can benefit businesses of almost any size. Smaller enterprises that currently rely on just a few servers providing database, storage and mail risk losing revenue to a major outage if one of those servers fails. Virtualizing and orchestrating those functions, even with only a small number of nodes, gives the business greater protection against system failure. Large enterprises are able to reduce TCO by using optimized upgrade cycles and increased hardware efficiency. Businesses of all sizes profit from the reduced risk of vendor lock-in and the capability to scale out the infrastructure based on changing demands.

You can find more details about the Open Compute Project on the Open Compute website.

OpenStack

OpenStack is becoming the de-facto standard open source software orchestrator for building and managing cloud computing platforms.

Orchestration is at the core of an agile, efficient, cost-effective, cloud-ready data center built on a Software Defined Infrastructure.

OpenStack can reduce costs through intelligent, automated resource allocation across your compute, storage and network infrastructure.

That means less manual work for data center administrators and faster, more efficient service deployment.

OpenStack is an open modular architecture based on self-contained projects, which as a whole provide a rich set of features and flexibility in the supported virtualization solutions. Among the many OpenStack components and services, we may highlight:

- Nova is the computing engine that provides virtual machines upon demand.

- Horizon is the web-based user interface for OpenStack services, where system administrators can see the status of the cloud.

- Heat provides orchestration services in OpenStack, storing the requirements of each cloud application.

- Keystone provides authentication and authorization for OpenStack services. It maps the users of the OpenStack cloud to their permissions for the services the cloud provides.

- Neutron provides the networking capabilities for devices managed by OpenStack services.

- In addition, there are storage components, providers of virtual machine images and telemetry for billing services.
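
To make the role of Heat more concrete, here is a hedged Python sketch of what the stored requirements of a small application might look like. Heat templates are normally written in YAML; the dictionary below only mirrors that structure for illustration and is serialized to JSON for readability. The function name `build_stack_template` and the server, image, flavor and network names are hypothetical placeholders, and the sketch does not call any OpenStack API.

```python
import json


def build_stack_template(server_name: str, image: str, flavor: str, network: str) -> dict:
    """Build a dictionary shaped like a minimal Heat (HOT) template.

    It describes a single virtual machine to be created by Nova and
    attached to an existing Neutron network. All argument values are
    placeholders supplied by the caller.
    """
    return {
        "heat_template_version": "2015-04-30",
        "description": "Illustrative single-server stack",
        "resources": {
            "app_server": {
                "type": "OS::Nova::Server",
                "properties": {
                    "name": server_name,
                    "image": image,
                    "flavor": flavor,
                    "networks": [{"network": network}],
                },
            }
        },
    }


if __name__ == "__main__":
    # Hypothetical names; a real cloud defines its own images and flavors.
    template = build_stack_template("web-1", "example-image", "example-flavor", "example-net")
    print(json.dumps(template, indent=2))
```

The point of the sketch is the division of labour: the template states what the application needs, and the orchestrator decides how Nova, Neutron and the other services fulfil it.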

Currently OpenStack supports Virtual Machine management through several hypervisors, the most widespread being KVM and Xen, but also Microsoft’s Hyper-V and VMware’s ESXi.

However, OpenStack isn’t just about virtualization; it can also orchestrate bare metal. For some workloads virtualization is not optimal. The Ironic project addresses this by supporting bare-metal provisioning while retaining OpenStack features such as instance and image management or authentication services. Ironic integrates well with Nova and other OpenStack components.

On the Sample Configurations tab of the OpenStack website there are many examples of OpenStack use cases, which give a good idea of its capabilities in real-world environments. Take a look and find out how OpenStack can benefit your company too.

Software Defined Storage

Move away from proprietary storage systems and enter the Software Defined Storage (SDS) era.

SDS scalability permits flexible expansion of infrastructure one node at a time, reducing over-provisioning of storage hardware such as disks and controllers. Independently scale server and storage resources to match workload demands and improve efficiency. Easily scale from tens to hundreds of TB in storage deployments. Flexibly scale out and pay only as you grow.

Scale-out storage differs from the scale-up architectures of traditional storage systems, which primarily scale by adding many individual disk drives to a single non-clustered storage controller. That approach relies on expensive proprietary hardware and is limited by the specifications of the system. In contrast, a scale-out architecture uses cheaper, standard hardware managed by software, creating a distributed storage solution that can be easily scaled out by adding nodes.
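
The effect of growing a scale-out pool node by node can be estimated with simple arithmetic. The Python sketch below is an illustration under stated assumptions: the function name `usable_capacity_tb` is hypothetical, every node is assumed to contribute the same raw capacity, and data protection is assumed to be plain replication with three copies. Real SDS products may use other schemes, such as erasure coding, with different overheads.

```python
def usable_capacity_tb(nodes: int, raw_tb_per_node: float, replicas: int = 3) -> float:
    """Estimate usable capacity of a replicated scale-out storage pool.

    Each piece of data is assumed to be stored `replicas` times, so the
    usable capacity is the total raw capacity divided by that factor.
    """
    if nodes < 0 or raw_tb_per_node < 0 or replicas < 1:
        raise ValueError("nodes and capacity must be >= 0, replicas must be >= 1")
    return nodes * raw_tb_per_node / replicas


if __name__ == "__main__":
    # Hypothetical nodes with 48 TB of raw disk each, added one at a time.
    for node_count in range(3, 7):
        capacity = usable_capacity_tb(node_count, 48)
        print(f"{node_count} nodes -> {capacity:.0f} TB usable")
```

Under these assumptions each added node raises usable capacity by a fixed increment, so capacity can follow demand instead of being bought up front.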

The software approach allows customers to build a solution on their own commodity hardware using the provided software, and it can also enhance the existing functions of specialized hardware. The software takes care of data integrity and provides geographic replication to protect against the failure of individual nodes.

SDS allows the customer to allocate and share storage assets across all workloads. Without the constraints of a physical system, a storage resource can be used more efficiently and its administration can be simplified through automated management. A single software interface can be used to manage the whole shared storage pool.

Integration with orchestration controllers like OpenStack allows all aspects of the storage environment to be controlled centrally.

SDS enables enterprises to achieve the same cost-effectiveness at scale as large-scale web and public infrastructure clouds.

Network Functions Virtualization

With NFV, network functions run virtualized in software on commodity x86 hardware instead of requiring dedicated appliances.

New networking services can be deployed as soon as new VNFs (Virtual Network Functions) are available, and no longer need to wait for proprietary equipment to become available. This means reduced time to market and increased flexibility in defining new services, which may expand sources of revenue for telecom operators.

With the right orchestration, fault tolerance moves from the equipment level to the system level, and different availability criteria may be defined for each service. Service delivery becomes automated, which makes services and networks more responsive to customer needs and reduces wasteful over-provisioning.
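
As an illustration of per-service availability criteria, the following Python sketch estimates how many redundant VNF instances a service would need to reach a target availability. It is a simplified model, not a description of any particular orchestrator: the function names are hypothetical, instances are assumed to fail independently, and the service is assumed to be up as long as at least one instance is up.

```python
def service_availability(instance_availability: float, instances: int) -> float:
    """Availability of a service backed by redundant, independent instances.

    The service is considered down only when every instance is down.
    """
    return 1.0 - (1.0 - instance_availability) ** instances


def instances_for_target(instance_availability: float, target: float,
                         max_instances: int = 16) -> int:
    """Smallest number of instances whose combined availability meets `target`."""
    for count in range(1, max_instances + 1):
        if service_availability(instance_availability, count) >= target:
            return count
    raise ValueError("target not reachable within max_instances")


if __name__ == "__main__":
    # Hypothetical services with different targets, each built from
    # instances assumed to be 99% available on their own.
    for name, target in (("monitoring", 0.999), ("session border control", 0.99999)):
        print(f"{name}: {instances_for_target(0.99, target)} instances")
```

A stricter target simply translates into more instances for that one service, rather than more resilient equipment for every service.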

NFV is currently being employed for functions such as routing, firewalls, monitoring tools, load balancers, Session Border Controllers, IMS and EPC.